With the rise of digitalization, data has become one of the most valued resources. Organizations' dependence on data is growing rapidly, and so is the need for data processing platforms.

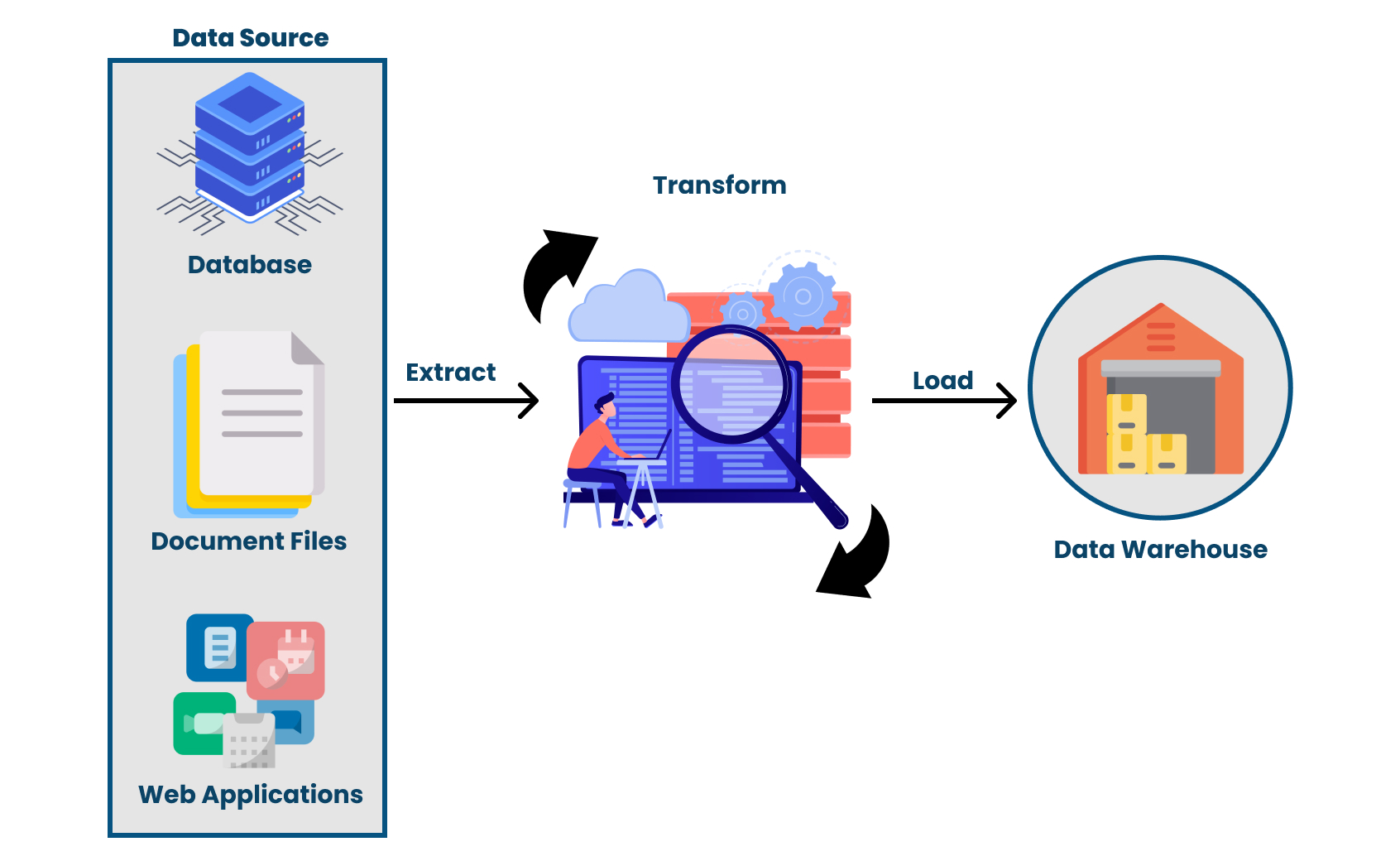

ETL is an acronym for Extract, Transform, and Load. It is the process of extracting data from source systems, transforming it into a uniform format, and then loading it into a central repository. To ensure the quality and accuracy of the extracted, transformed, and loaded data, organizations adopt a process called ETL testing.

Businesses need data to make informed decisions, analyze the company’s performance, and back up arguments. The insights they derive from data are of utmost significance, and any glitch during data processing can cost millions. According to a Gartner report, organizations lose an average of around 15 million USD annually due to poor data quality. Bad data leads to misleading information, which results in wrong decisions that may prove costly and tarnish the goodwill of an organization.

To avert this, data needs to be processed and transformed into quality information and delivered to the right people at the right time. This is where the concepts of ETL and ETL testing come into the picture.

To understand the intricacies of the ETL process, we need to understand a few concepts related to data and how they function under a business model. So, without further ado, let’s get started.

Business Intelligence

Business Intelligence (BI) is a set of methods and tools that an organization uses to access and explore data from multiple source systems to gauge performance and make informed decisions. BI leverages software and services to collect, analyze and transform raw data into records and meaningful information.

Organizations need BI to get an all-inclusive view of the organization's data and use them to drive change, eliminate inefficiencies and adapt their offering to the market.

Database

Simply put, a database is an organized collection of information. It stores and maintains all sorts of data for easy retrieval and modification. Typically, databases record and process the daily transactions of a company in a way that can be easily updated, expanded, and modified.

Data Warehouse

A data warehouse is a central repository of all the data collected from multiple sources. It stores historical data in one place, and the organization then uses that data to create analytical reports. A data warehouse is a core component of BI, as it enables and supports activities like data analysis, reporting, and integration.

| Database | Data warehouse |
|---|---|
| A collection of related data that represents some elements of the real world | A central repository that stores historical and cumulative data from single or multiple sources |
| Designed to record data | Designed to analyze and study data |
| Contains comprehensive data | Contains summarized data |
| Uses Online Transactional Processing (OLTP) | Uses Online Analytical Processing (OLAP) |
| Slower and less accurate for analytical queries | Faster and more accurate for analytical queries |
| Constructing a database isn't expensive | Constructing a warehouse is an expensive affair |
| Application-oriented design | Subject-oriented design |
| Data is dynamic | Data is (largely) static |

ETL

A data warehouse maintains strict accuracy and integrity of data using a process called ETL. The process helps organizations in consolidating and standardizing data into a centralized location and in a format that is readily available for analysis and decision-making.

During the ETL process, information is first extracted from a source such as a database, file, or spreadsheet, then transformed into a format that qualifies the data warehouse’s standards, and finally loaded into a data warehouse.
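The three steps can be illustrated with a minimal sketch. It assumes a hypothetical in-memory source list and a hypothetical `sales` table in an in-memory SQLite database standing in for the warehouse; the column names and date format are invented for illustration and do not come from any particular ETL tool.

```python
import sqlite3

# Hypothetical source rows: (customer name, date as DD/MM/YYYY, amount as text)
SOURCE_ROWS = [
    ("  Alice ", "03/01/2023", "120.50"),
    ("Bob", "15/02/2023", "80"),
]

def extract():
    """Extract: read raw rows from the source (here, an in-memory list)."""
    return list(SOURCE_ROWS)

def transform(rows):
    """Transform: trim names, convert dates to ISO 8601, cast amounts to float."""
    cleaned = []
    for name, date, amount in rows:
        day, month, year = date.split("/")
        cleaned.append((name.strip(), f"{year}-{month}-{day}", float(amount)))
    return cleaned

def load(conn, rows):
    """Load: write the transformed rows into the (hypothetical) warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales (customer TEXT, sale_date TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()

warehouse = sqlite3.connect(":memory:")
load(warehouse, transform(extract()))
loaded = warehouse.execute(
    "SELECT customer, sale_date, amount FROM sales ORDER BY customer"
).fetchall()
print(loaded)  # [('Alice', '2023-01-03', 120.5), ('Bob', '2023-02-15', 80.0)]
```

Real pipelines typically run these steps in batches against production systems, but the shape of the flow is the same.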

The data gets transferred in batches from a source to a destination using various ETL tools like Xplenty, iCEDQ, etc. However, during the process, several conflicts emerge at the integration points that need to be resolved by the ETL testers.

ETL testing is the process of validating, authenticating, and qualifying data while averting data duplication and truncation.

ETL Testing

A data warehouse is often dubbed a company’s “single source of truth.” The data stored here is used for various analytical purposes, and any error in it affects the accuracy of the reports and outcomes. To maintain the sanctity of the warehouse, it is important to meticulously check and test all the integration points and troubleshoot wherever required.

The ETL testing process ensures that the transfer of data from heterogeneous sources to a unified repository follows the transformation rules and meets all requirements. The process also helps in the early detection and mitigation of defects and errors.

ETL testing ensures that nothing gets lost or corrupted along the way, which is a real risk. Here are a few of the reasons:

- Data is often obtained in several formats and from different (heterogeneous) sources. Before loading, the data is converted into a format that complies with the targeted warehouse design.

- Organizations deal with a colossal amount of data, and it's only growing. Experts believe that the rate at which data is growing has outpaced our ability to examine and organize it.

- The data mapping process that joins data fields in source and target databases is vulnerable to errors. Data duplication and compromised quality are common issues.
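As an illustration of the duplication issue mentioned in the last point, a tester might look for keys that were loaded more than once. The sketch below assumes a hypothetical `customers` table with a `customer_id` business key, held in an in-memory SQLite database.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (customer_id INTEGER, email TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?)",
    [
        (1, "a@example.com"),
        (2, "b@example.com"),
        (2, "b@example.com"),  # the same record loaded twice
        (3, "c@example.com"),
    ],
)

def find_duplicate_keys(conn):
    """Return (customer_id, occurrences) for every key loaded more than once."""
    return conn.execute(
        "SELECT customer_id, COUNT(*) FROM customers "
        "GROUP BY customer_id HAVING COUNT(*) > 1 ORDER BY customer_id"
    ).fetchall()

duplicates = find_duplicate_keys(conn)
print(duplicates)  # [(2, 2)]
```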

Stages Of ETL Testing Process

ETL testing is done in multiple phases to confirm if the data was extracted properly, transferred smoothly, and loaded in the desired format. The phases are performed throughout the cycle of the ETL process for smooth transfer of data. Here are the major phases of the ETL testing process:

- Identifying data sources and gathering business requirements: The first step is to understand expectations and the scope of the project. This helps testers understand the business flow and assess the reporting needs of the organization. The process includes identification of the data sources, requirements of the target system, and the degree of transformation required. It’s important to clearly define and document the data model as it will act as a reference for the Quality Assurance (QA) team.

- Assessing and evaluating data sources: At this stage, the team evaluates the source system and assesses the format of the data. Testers perform a data count check and maintain a record of all the primary sources. The record is later used for data reconciliation checks and for preventing duplicate files from being transferred. Often, in an organization, the same data gets stored in different formats. If the same file, stored in different formats, gets transferred to the data warehouse, it might generate inaccurate and misleading reports.

- Creating test cases and preparing test data: The next step is designing ETL mapping for various scenarios. The design contains a test action plan and solutions for different challenges. The design also includes all the information required for meeting the client's needs and is generally pre-approved by the QA head. The team is also required to write SQL scripts and define the transformational rules.

- Validating extracted data: As the ETL process kicks in, testers come into action too. The first step of ETL is extraction, and at this stage, the testers ensure that all the data has been extracted cleanly and completely. In addition to supervising the extraction process, testers keep identifying bugs and prepare a report alongside. It’s essential to detect defects and fix bugs at the initial stage to lessen the chances of a misleading analysis. Most importantly, finding and fixing bugs costs the least at the initial stage.

- Validating data transformation: Here, testers ensure that the transformed data matches the schema of the target repository. The QA team makes sure that the data type syncs with the mapping document.

- Verifying loaded data: Once the data has been taken from the primary source system and converted into the desired format, the data is loaded to the target warehouse. Here, the testers reconcile the data and check for data integrity.

- Preparing summary report: After the test, the QA team prepares a summary report. It contains all the findings of the tests and documents bugs and errors that were detected during the testing process. The report lets decision-makers know the outcome of the ETL testing process.

- Closing and reports: The team files and submits the ETL test closure report.

ETL Testing Challenges

Turning raw data into subject-oriented, nonvolatile, and ready-to-use Business Intelligence isn’t easy, simply because of the complexity and the volume of the data involved. The data is often stored in one format in the source system and another in the destination systems, so testing the data in different locations leads to numerous challenging situations. Some challenges to watch out for are:

- Inaccurate, corrupt, or duplicate data

- Data loss or truncation during migration

- ETL application inappropriately rejecting relevant data or replacing it with default values

- Lack of an inclusive test bed

- Absence of comprehensive business requirements

- Use of outdated or inappropriate testing tools

- Highly unstable testing environment

- Complex business flow information

- Frequent changes in the requirements

- Arduous and time-consuming execution due to big and inconsistent chunks of data

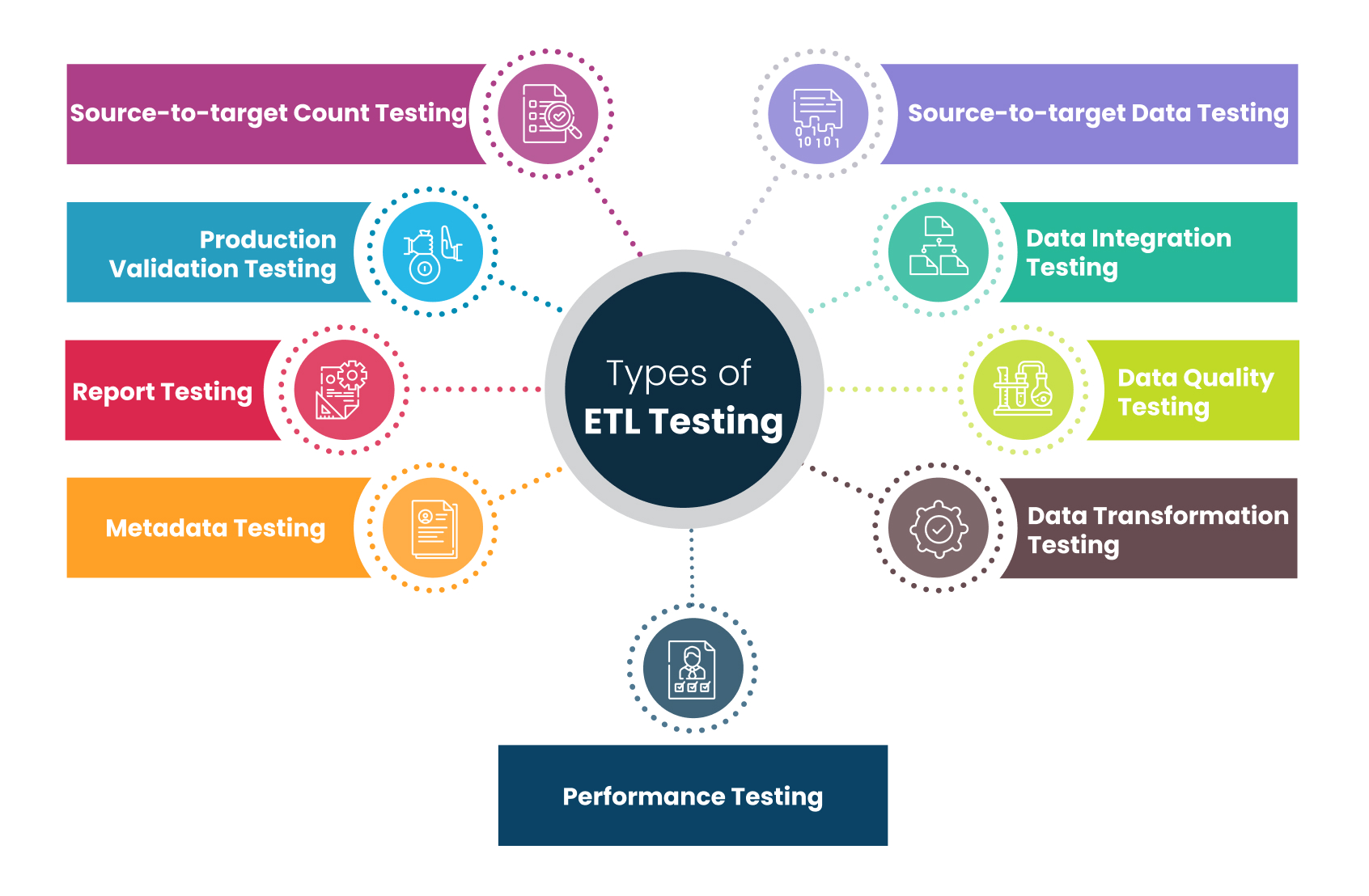

Types of ETL Testing

ETL testing is intended to ensure error-free transfer of data from the source to the target system. It is designed to tackle challenges and address the nuances of the ETL cycle. Various types of tests are executed at different stages, and they can be classified as the following:

Production Validation Testing: In order to obtain accurate reports for analysis, the data used in production should be error-free. This test checks the data transferred to the production system. It includes validating the data in the production system and comparing it against the source data.

Source-to-target Data Testing: In this test, data values stored in the source system are cross-checked with the data stored in the target system. Source-to-target data testing is a time-consuming process and is very crucial for financial and banking projects.
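One common way to cross-check values is a set difference between the two systems. The sketch below assumes hypothetical `src_accounts` and `tgt_accounts` tables in a single in-memory SQLite database; in practice the source and target usually live in different systems, so the comparison would run through whatever connectors are available.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE src_accounts (account_id INTEGER PRIMARY KEY, balance REAL);
    CREATE TABLE tgt_accounts (account_id INTEGER PRIMARY KEY, balance REAL);
    INSERT INTO src_accounts VALUES (1, 100.0), (2, 250.5), (3, 75.0);
    INSERT INTO tgt_accounts VALUES (1, 100.0), (2, 205.5), (3, 75.0);
""")

def rows_missing_from(conn, left, right):
    """Return rows present in `left` that have no identical row in `right`."""
    # Table names are interpolated only because they are fixed, trusted literals here.
    return conn.execute(
        f"SELECT account_id, balance FROM {left} "
        f"EXCEPT SELECT account_id, balance FROM {right} ORDER BY 1"
    ).fetchall()

only_in_source = rows_missing_from(conn, "src_accounts", "tgt_accounts")
only_in_target = rows_missing_from(conn, "tgt_accounts", "src_accounts")
print(only_in_source)  # [(2, 250.5)]
print(only_in_target)  # [(2, 205.5)]
```

Running the difference in both directions catches rows that were altered as well as rows that were dropped or added.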

Source-to-target Count Testing: This test verifies whether the record count in the source system matches the count of records loaded into the target system. It gives a quick indication of whether the load is complete, but it is not conclusive on its own because it doesn't examine details like the value, order, and type of the data loaded.
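A small sketch shows why a count check alone is not enough. It assumes hypothetical `src_orders` and `tgt_orders` tables in which one value was changed during the load: the counts agree even though the data does not.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE src_orders (order_id INTEGER, status TEXT);
    CREATE TABLE tgt_orders (order_id INTEGER, status TEXT);
    INSERT INTO src_orders VALUES (1, 'shipped'), (2, 'pending');
    INSERT INTO tgt_orders VALUES (1, 'shipped'), (2, 'shipped');
""")

def row_count(conn, table):
    """Count the rows in a table (the table name is a trusted literal in this sketch)."""
    return conn.execute(f"SELECT COUNT(*) FROM {table}").fetchone()[0]

counts_match = row_count(conn, "src_orders") == row_count(conn, "tgt_orders")
values_match = (
    conn.execute("SELECT * FROM src_orders ORDER BY order_id").fetchall()
    == conn.execute("SELECT * FROM tgt_orders ORDER BY order_id").fetchall()
)
print(counts_match, values_match)  # True False
```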

Metadata Testing: This test is performed to check data types, length, indexes, and constraints.
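As a rough sketch, a metadata check can compare the declared columns of a target table against an expected definition. The example assumes a hypothetical `dim_customer` table and a hypothetical `EXPECTED` specification taken from a mapping document. It uses SQLite's `PRAGMA table_info`, which reports the declared type text; other databases expose the same information through their own catalog views.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE dim_customer ("
    "customer_id INTEGER PRIMARY KEY, name VARCHAR(50) NOT NULL, joined DATE)"
)

# Hypothetical specification: column name -> (declared type, NOT NULL flag)
EXPECTED = {
    "customer_id": ("INTEGER", False),
    "name": ("VARCHAR(50)", True),
    "joined": ("TEXT", False),  # deliberately differs from the table above
}

def actual_columns(conn, table):
    """Map column name -> (declared type, NOT NULL flag) using PRAGMA table_info."""
    # Each row is (cid, name, type, notnull, dflt_value, pk).
    return {
        row[1]: (row[2], bool(row[3]))
        for row in conn.execute(f"PRAGMA table_info({table})")
    }

actual = actual_columns(conn, "dim_customer")
mismatches = {
    col: (spec, actual.get(col))
    for col, spec in EXPECTED.items()
    if actual.get(col) != spec
}
print(mismatches)  # {'joined': (('TEXT', False), ('DATE', False))}
```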

Performance Testing: This test ensures that data is loaded within the prescribed time frame. It also checks whether the system handles the current user traffic and whether it can sustain the expected increase in the number of users accessing this data.

Data Transformation Testing: The test verifies if data is transformed in compliance with business requirements and SOPs. The tester runs multiple SQL queries for each row and then matches the output with the destination data.
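One way to approach this, sketched below, is to re-implement the transformation rule independently and compare its output with what actually landed in the target. The rule used here (uppercase full name, cents converted to dollars) and all field names are hypothetical stand-ins for whatever the mapping document specifies.

```python
# Hypothetical source records and the rows found in the target after the ETL run.
source = [
    {"id": 1, "first": "ada", "last": "lovelace", "amount_cents": 1999},
    {"id": 2, "first": "alan", "last": "turing", "amount_cents": 500},
]
target = {
    1: {"full_name": "ADA LOVELACE", "amount_usd": 19.99},
    2: {"full_name": "Alan Turing", "amount_usd": 5.0},  # name was not uppercased
}

def expected_row(src):
    """Apply the (assumed) business rule independently of the ETL job."""
    return {
        "full_name": f"{src['first']} {src['last']}".upper(),
        "amount_usd": src["amount_cents"] / 100,
    }

# Collect the ids whose target row differs from the independently computed row.
failures = [s["id"] for s in source if target.get(s["id"]) != expected_row(s)]
print(failures)  # [2]
```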

Data Quality Testing: This test makes sure that the ETL application rejects default values and reports invalid data. A tester performs a Syntax Test to flag invalid characters, invalid patterns, and incorrect upper- or lowercase usage, and runs Reference Tests to check whether the data conforms to the overall architectural design.
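A Syntax Test can be sketched with a couple of regular expressions. The patterns and field names below are illustrative assumptions; the email pattern in particular is deliberately loose and is not a full address validator.

```python
import re

# Illustrative patterns only; real rules would come from the data model.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
DATE_PATTERN = re.compile(r"^\d{4}-\d{2}-\d{2}$")

records = [
    {"id": 1, "email": "ann@example.com", "signup": "2023-04-01"},
    {"id": 2, "email": "bob(at)example.com", "signup": "2023-04-02"},
    {"id": 3, "email": "cy@example.com", "signup": "04/03/2023"},
]

def syntax_errors(record):
    """Return the names of the fields whose values do not match their pattern."""
    errors = []
    if not EMAIL_PATTERN.match(record["email"]):
        errors.append("email")
    if not DATE_PATTERN.match(record["signup"]):
        errors.append("signup")
    return errors

# Keep only the records that have at least one invalid field.
report = {r["id"]: syntax_errors(r) for r in records if syntax_errors(r)}
print(report)  # {2: ['email'], 3: ['signup']}
```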

Data Integration Testing: In this type of testing, a tester verifies whether data from all the sources are migrated as expected and checks threshold values. The test also involves the integration of data into the warehouse after transformation and loading.

Report Testing: In this test, the accuracy of the data in reports is assessed. Testers make a few calculations and verify the layout and functionality as per the data model.

ETL Testing Tools

There are both manual and automated ways to test the efficiency of an ETL process. For manual testing, SQL query testing is the most preferred method. However, the method is a bit tedious, time-consuming, and prone to errors. Hence, many organizations have started adopting automated ETL testing tools. These tools reduce time consumption and help in maintaining accuracy. Here is a list of the top ETL testing tools:

QuerySurge

It is a tool for automating the testing of data. It validates that data extracted and loaded from the source system remains intact in the target systems as well. The tool easily and quickly detects any mismatch.

Xplenty

It is a cloud-based ETL solution that has a point-and-click interface and enables simple data integration, processing and preparation. The powerful tool is capable of cleaning, normalizing, and transforming data while adhering to compliance best practices.

iCEDQ

The platform automates data-centric processes such as data migration and production. It is used to detect all types of problems that occur during the ETL flow. It verifies, validates, and reconciles the source and destination system files.

RightData

RightData helps business and technology teams in improving the performance of ETL projects. It allows users to validate, verify and reconcile data, irrespective of the differences in the data model and data type.

BiG EVAL

It is specifically built to continuously validate and monitor the quality of data. It automates the ETL testing process and provides quality metrics in production.


Responsibilities of an ETL Tester

An ETL tester is responsible for checking the accuracy and completeness of the ETL process. They authenticate the data sources, check the precision of data extraction, apply the transformation logic, and load data in the data warehouse. They design, test, and fine-tune the organization's data storage system.

Here are the key responsibilities of an ETL tester:

- Test ETL software

- Test ETL data warehouse components

- Manage backend data-driven tests

- Plan, design, and execute test layouts

- Log the errors and implement solutions for fixing bugs and issues

- Approve design specifications as per business requirements

- Test data transfer

- Write SQL queries for various situations

- Check the test summary report

Conclusion

The success of any Business Intelligence system depends upon the accuracy and performance of ETL testing.

For businesses of all sizes and industries, the world of data is only getting bigger. Across the globe, data is swelling exponentially and changing how we live our lives. According to a report from IBM Marketing Cloud, “10 Key Marketing Trends For 2017,” 90 percent of the data in the world today was generated in the last two years alone, at 2.5 quintillion bytes of data per day. To put that in context, that’s 2,500,000,000,000,000,000 bytes! The pace is only accelerating with new technological advancements.

However, all this data is essentially a waste if not properly processed. Methods like ETL testing pave the way for efficiently processing and analyzing the data. It unveils the huge reserve of valuable data-driven insights hidden within the clamor.

Amid massive data production, enterprises need the right set of tools and practices to move their bulk data from one source to another while safeguarding business integrity. The ETL process and ETL testing help organizations enhance productivity and simplify data retrieval. They turn data into a format that can be easily read, accessed, and managed.

Data is the pillar of any organization, and ETL testing supervises the ETL process to keep that data meaningful. The process validates and verifies data while preventing duplication and loss. Due to its direct impact on BI, the ETL testing process has become a non-negotiable requirement for organizations. Reliance on automated ETL testing tools is increasing as organizations look to enhance productivity and simplify the retrieval of information from giant heaps of data.