In today's rapidly evolving technological landscape, software development processes are constantly advancing to deliver faster, more reliable, and efficient results.

One such evolution is the advent of autonomous testing, a paradigm where artificial intelligence and machine learning take center stage, minimizing human intervention while optimizing testing processes.

In this article, we will explore the concept of autonomous testing, examining its levels of autonomy, benefits, challenges, and distinctions from traditional test automation.

Understanding Autonomous Software Testing

Autonomous testing refers to the application of artificial intelligence (AI) and machine learning (ML) technologies to perform software testing tasks with minimal human involvement.

The objective is to accelerate the testing process, boost efficiency, and improve the accuracy of defect detection. Unlike manual testing, where human testers write and execute test cases, autonomous testing leverages AI algorithms to generate, execute, and evaluate tests.

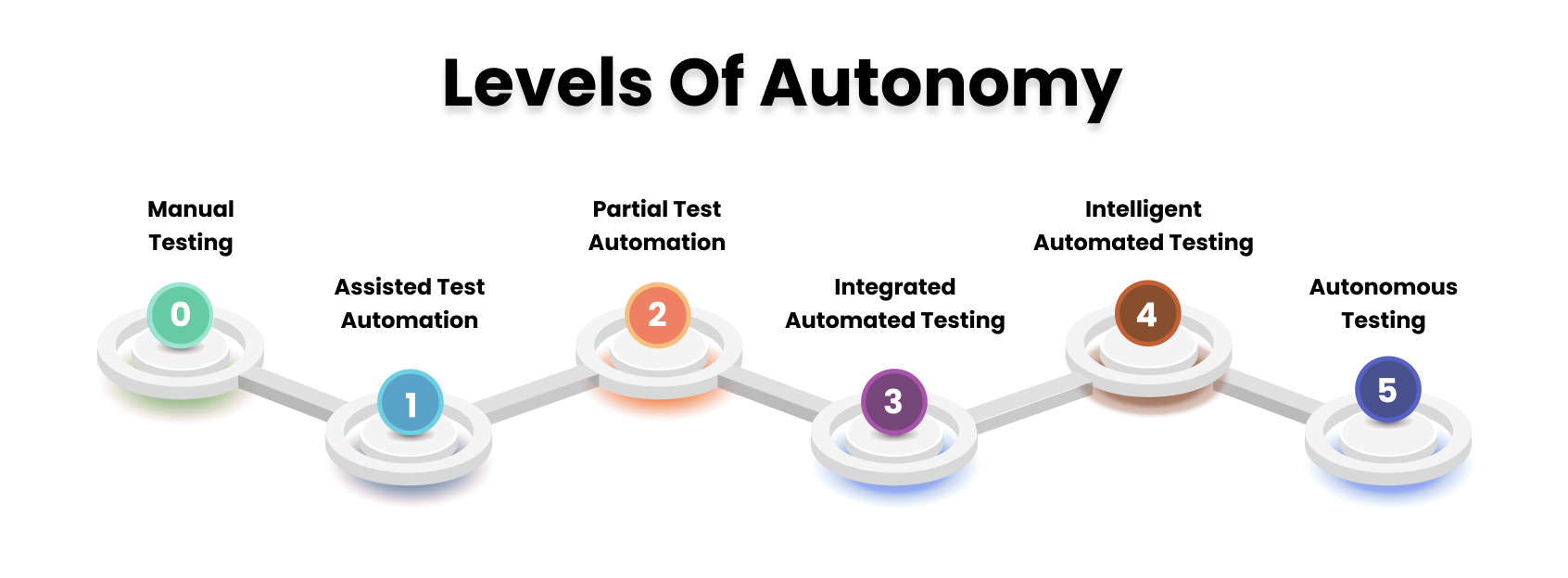

Levels of Autonomy in Testing

Level 0: Manual Testing

Level 0 software testing is a hands-on, manual process where human testers methodically create test cases, execute them, and verify the results. This method relies heavily on human skill, domain knowledge, and thoroughness. While it gives testers a comprehensive understanding of the system, it is time-consuming and may not be feasible for large-scale projects.

Level 1: Assisted Test Automation

At Level 1, AI starts to provide assistance, but human testers remain crucial in the design, execution, and validation of test cases.

AI algorithms help by analyzing code changes and grouping related ones. This makes test case verification more efficient, because testers can review larger sets of logically related changes together.
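
As a rough illustration of grouping related changes, the sketch below groups changed file paths by their top-level directory. This is a deliberately simple stand-in for the kind of analysis an AI-assisted tool might perform. The function name `group_changes_by_module` and the sample paths are hypothetical.

```python
from collections import defaultdict
from pathlib import PurePosixPath


def group_changes_by_module(changed_paths):
    """Group changed file paths by their top-level directory."""
    groups = defaultdict(list)
    for path in changed_paths:
        parts = PurePosixPath(path).parts
        # Files at the repository root go into a shared "(root)" group.
        module = parts[0] if len(parts) > 1 else "(root)"
        groups[module].append(path)
    return dict(groups)


# Hypothetical list of files touched by a set of commits.
changes = [
    "billing/invoice.py",
    "billing/tax.py",
    "auth/login.py",
    "README.md",
]
grouped = group_changes_by_module(changes)
for module, paths in sorted(grouped.items()):
    print(module, paths)
```

A tester could then review each group as one unit instead of verifying every change in isolation. A real tool would likely use richer signals than directory names, such as call graphs or change history.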

Level 2: Partial Test Automation

At Level 2, AI's test automation capabilities improve significantly. The AI system gets better at recognizing contextual information in the code repository, which reduces the manual effort required of testers.

While human testers continue to write test cases, AI-generated test code grows in sophistication, covering a greater range of code scenarios. However, these AI-generated tests may be rudimentary and might not cover all edge cases.

Level 3: Integrated Automated Testing

Level 3 AI engagement signifies a substantial advancement. Human testers still develop and execute tests, but AI takes the lead in comparing changes against a baseline, which could be the existing codebase or a reference version.

Although this level of autonomy promotes consistent and accurate testing, testers still manage the overall testing strategy, which includes prioritizing components and deciding on testing methodologies.
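
The baseline comparison described for Level 3 can be sketched as a snapshot diff. The example assumes the observed behavior can be captured as a flat dictionary. The function `compare_to_baseline` and the sample values are hypothetical and not taken from any particular tool.

```python
def compare_to_baseline(baseline, current):
    """Return the keys that were added, removed, or changed."""
    added = {k: current[k] for k in current.keys() - baseline.keys()}
    removed = {k: baseline[k] for k in baseline.keys() - current.keys()}
    changed = {
        k: (baseline[k], current[k])
        for k in baseline.keys() & current.keys()
        if baseline[k] != current[k]
    }
    return {"added": added, "removed": removed, "changed": changed}


# Hypothetical snapshots of a response before and after a code change.
baseline = {"status": 200, "total": 42.0, "currency": "USD"}
current = {"status": 200, "total": 42.5, "items": 3}

diff = compare_to_baseline(baseline, current)
print(diff)
```

Deciding whether each reported difference is an intended change or a regression remains a human judgment at this level.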

Level 4: Intelligent Automated Testing

At Level 4, AI's capabilities grow to include generating test cases, with human assistance. AI systems not only validate modifications but also actively participate in creating test scenarios, including those involving complex edge cases.

This simplifies the testing process, expands test coverage, and lessens the pressure on human testers. In complex code environments, the AI system may require developer input or intervention, striking a balance between automation and human oversight.

Level 5: Autonomous Testing

Level 5, where AI takes complete control of testing activities, marks the pinnacle of this progression. It entails creating, running, and improving test cases without direct human involvement.

Capable of handling various software scenarios and complex codebases, the AI system evolves into an adept and self-reliant testing partner. It may even generate test cases for complex code behaviors that experienced programmers would find hard to anticipate.

This evolution signifies a gradual shift from manual, labor-intensive testing to efficient and automated methods. As AI gains autonomy, testing becomes optimized, resulting in faster feedback, broader code coverage, and superior software quality. Despite increasing autonomy levels, a collaborative AI-human relationship remains vital for a holistic testing approach.

Differentiating Test Automation from Autonomous Testing

Although both test automation and autonomous testing aim to optimize software testing, they differ in terms of human intervention and decision-making:

Test Automation involves scripting test cases and scenarios executed by automation testing tools. Human testers are responsible for creating and maintaining these scripts. Furthermore, decisions regarding testing strategies are primarily human-driven.

Conversely, Autonomous Testing advances further, employing AI not only to generate and execute tests but also to make decisions regarding test scenarios, expected outcomes, and test case creation.
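
To make the contrast concrete, here is a minimal sketch that places a hand-scripted test next to a test whose inputs are generated by the tool. Random generation with an invariant check is only a simple stand-in for AI-driven test generation. The function `apply_discount` and both test functions are hypothetical.

```python
import random


def apply_discount(price, percent):
    """Hypothetical function under test: reduce a price by a percentage."""
    return round(price * (1 - percent / 100), 2)


def scripted_test():
    # Test automation: a human picks the input and the expected output.
    assert apply_discount(100.0, 20) == 80.0


def generated_test(runs=200, seed=0):
    # Stand-in for autonomous generation: the tool produces the inputs,
    # and an invariant replaces hand-written expected values.
    rng = random.Random(seed)
    for _ in range(runs):
        price = round(rng.uniform(0, 1000), 2)
        percent = rng.randint(0, 100)
        result = apply_discount(price, percent)
        assert 0 <= result <= price, (price, percent, result)
    return runs


scripted_test()
print(generated_test(), "generated cases passed")
```

In the scripted test, every decision is made by a person. In the generated test, the tool chooses the scenarios, which is a small step toward the decision-making that autonomous testing aims for.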

Challenges and Benefits of Autonomous Testing

While the transition to autonomous testing offers a plethora of benefits, it is essential to acknowledge the challenges that arise:

Autonomous Testing Challenges

- Absence of QA Environment: Autonomous testing is difficult for organizations that lack a robust QA environment, because it cannot be run against live data. Creating a staging environment specifically for autonomous testing can itself be difficult.

- Maintenance Overhead: Maintenance costs are a significant expense for small and medium-sized enterprises (SMEs). To test end-to-end user experiences completely, tests must be updated regularly as new functionality is added, which can strain resources and reduce efficiency.

- Limitation in Negative Scenarios: The ability of autonomous testing to evaluate negative scenarios is limited. Often, there is a focus on positive scenarios, potentially overlooking critical negative events.

- Test Data Generation: Creating effective test cases requires accurate and diverse data, posing a challenge for AI to simulate a representative range of scenarios.

- Test Assertion Complexity: It might be challenging to ensure that test cases created by AI assert expected results appropriately. Validating the accuracy of AI-generated assertions can be problematic.

- Cost of Training: AI models need case-specific training to improve, which requires significant resources.

Navigating these challenges is crucial for organizations aiming to fully leverage the potential of autonomous testing in their software development processes.

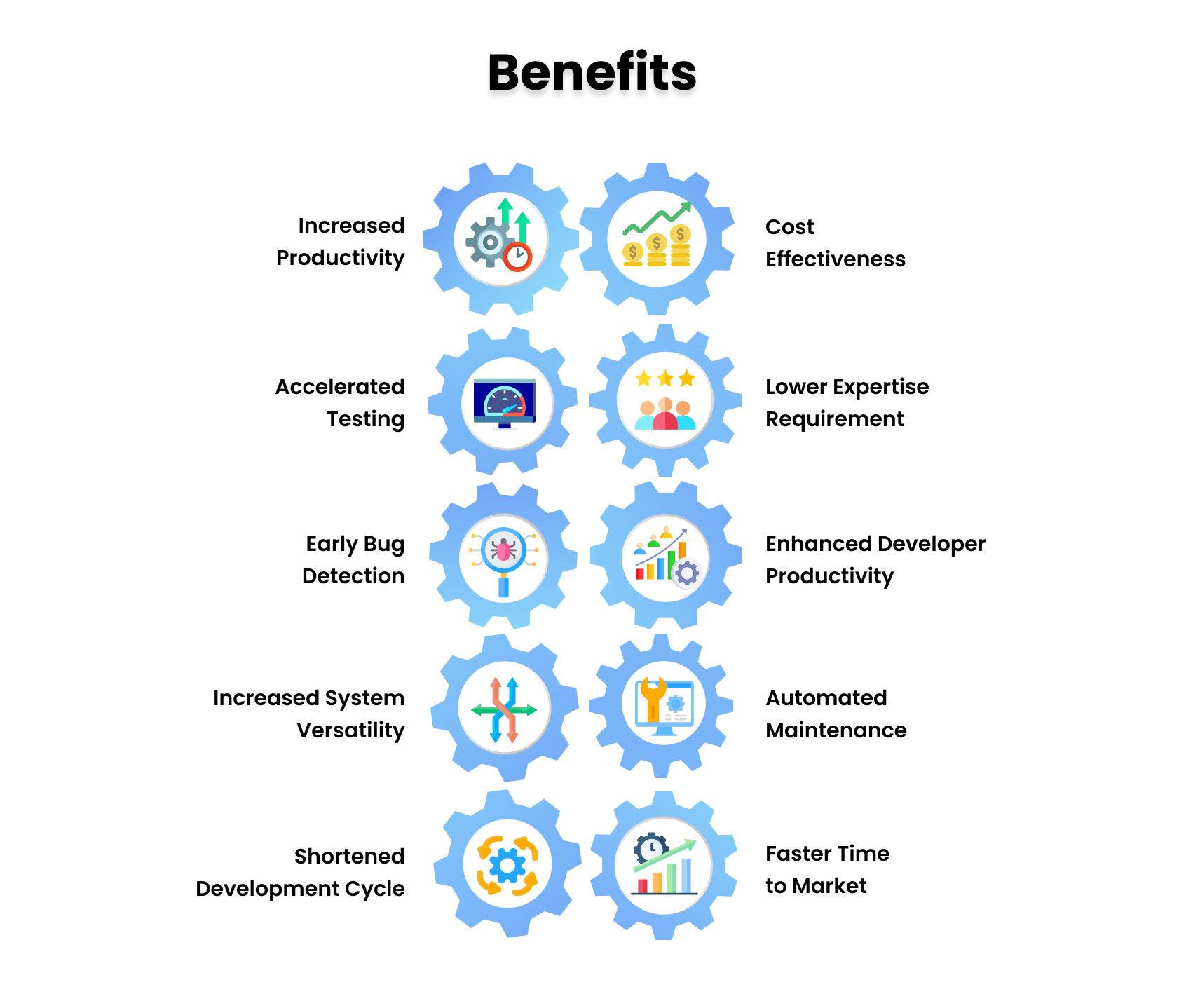

Autonomous Testing Benefits

Autonomous testing ushers in a multitude of advantages that can transform the software development lifecycle:

- Increased Productivity: By automating routine test procedures, autonomous testing frees up human testers to tackle complex challenges, thereby boosting overall productivity.

- Accelerated Testing: This advanced testing methodology significantly speeds up the test cycle, enabling faster feedback and quicker iterations in the development process.

- Early Bug Detection: Autonomous systems can identify defects at an earlier stage, mitigating the risk of costly downstream corrections and ensuring a smoother development flow.

- Cost Effectiveness: Despite the initial investment, the long-term cost benefits are substantial, with savings on labor and reduced time required for manual testing.

- Lower Expertise Requirement: The technology lowers the barrier to entry, requiring less specialized expertise for test management, which broadens its accessibility within an organization.

- Enhanced Developer Productivity: Developers can focus their talents more on creating features rather than testing, as autonomous testing takes on the heavy lifting of routine checks and balances.

- Automated Maintenance: Test maintenance, often a tedious aspect of quality assurance, is streamlined through automation, thereby enhancing efficiency.

- Increased System Versatility: The adaptability of autonomous testing systems means they can easily adjust to new testing scenarios, making them suitable for a dynamic development environment.

- Shortened Development Cycle: The overall development timeline is shortened due to the efficiency of autonomous testing, allowing for rapid progression from concept to production.

- Faster Time to Market: Ultimately, the most significant advantage is the reduction in time to market for software products, as autonomous testing streamlines and expedites the entire testing phase.

Embracing autonomous testing systems in software development can revolutionize processes, making them faster, more reliable, and cost-efficient, benefiting development teams and end-users alike.

Key Factors that Affect Effectiveness of Test Automation

Test automation is a crucial practice in modern software development, but its effectiveness depends on several key factors:

- Strategy and Planning: Having a well-defined test automation strategy is fundamental. It should outline objectives, scope, and the selection of appropriate automation tools and frameworks.

- Test Selection: Not all tests are suitable for automation. Prioritize tests that are repetitive, time-consuming, and critical for your application.

- Test Data Management: Ensuring that your test data is well-managed and consistent is vital. Inadequate data can lead to unreliable test results.

- Maintainability: Automation scripts require regular maintenance to keep pace with evolving applications. Neglecting this can lead to substantial maintenance overhead.

- Skillset: A skilled automation team is crucial. Ensure that your team possesses the necessary skills in scripting, debugging, and test framework development.

- Continuous Integration: Integrating automation into your CI/CD pipeline ensures that tests run consistently with each code change, promoting early issue detection.

- Monitoring and Reporting: Implementing effective monitoring and reporting mechanisms allows you to identify and address issues promptly.

- Tool Selection: Choose automation tools that align with your application's technology stack and your team's expertise.

- Testing Environment: Ensure that your testing environment mirrors the production environment as closely as possible to reduce discrepancies.

- Collaboration: Promote collaboration between developers, testers, and automation engineers to align efforts and objectives.
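
The test selection factor above can be sketched as a simple scoring pass over candidate tests. The formula (repetitions × manual duration × criticality) is an illustrative assumption, not an established standard. The `AutomationCandidate` class and the sample values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AutomationCandidate:
    name: str
    runs_per_month: int     # how repetitive the test is
    manual_minutes: float   # how long one manual run takes
    criticality: int        # 1 (low) to 5 (high), assigned by the team


def automation_score(candidate):
    """Illustrative score: monthly manual effort weighted by criticality."""
    return (
        candidate.runs_per_month
        * candidate.manual_minutes
        * candidate.criticality
    )


# Hypothetical candidates with made-up figures.
candidates = [
    AutomationCandidate("checkout_flow", 30, 15, 5),
    AutomationCandidate("profile_avatar_upload", 4, 10, 2),
    AutomationCandidate("login", 60, 3, 5),
]

ranked = sorted(candidates, key=automation_score, reverse=True)
for candidate in ranked:
    print(f"{candidate.name}: {automation_score(candidate)}")
```

Teams would normally tune the weighting to their own context. The point is only that repetitive, time-consuming, and critical tests rise to the top of the list.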

In short, the effectiveness of test automation depends on a well-defined strategy and plan, judicious test selection, robust test data management, maintainable scripts, a skilled automation team, seamless integration into the CI/CD pipeline, effective monitoring and reporting, appropriate tool selection, a testing environment that mirrors production, and strong collaboration between all stakeholders. By acknowledging and optimizing these factors, organizations can enhance the effectiveness of their test automation efforts and improve software quality.

Measuring Success: Autonomous Testing Use Cases

Measuring the success of autonomous testing involves evaluating various aspects to determine its effectiveness in improving software development processes and product quality.

Here are five widely adopted metrics to measure the success of autonomous testing:

- Defect Count: Compare the number of defects identified through an autonomous testing system with those found using traditional methods. If autonomous testing catches more defects before release, so that fewer escape to later stages, it is detecting defects more efficiently and accurately, which showcases its value.

- Percentage Autonomous Test Coverage of Total Coverage: Calculate the percentage of test coverage achieved autonomously in relation to the overall coverage. A higher percentage of autonomous test coverage indicates that the AI-driven process is comprehensively covering the application, which diminishes the need for manual testing.

- Cost Reduction: Assess the cost savings achieved through reduced manual testing efforts and increased efficiency. Compare the costs of implementing and maintaining autonomous testing tools with the benefits they provide.

- Useful vs. Irrelevant Results: Evaluate the ratio of relevant and actionable test results to those that might not provide valuable insights. A higher ratio of useful results showcases the precision and efficiency of autonomous testing systems in identifying meaningful issues.

- Percentage of Broken Builds: Measure the rate at which autonomous testing identifies broken builds accurately. A higher detection rate of broken builds signifies that the AI-driven testing process is efficiently identifying issues early on, thereby preventing flawed code from advancing through the development pipeline.
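
The ratio-based metrics above reduce to simple arithmetic. The sketch below computes three of them from made-up figures for a single release cycle. All variable names and numbers are hypothetical. It assumes coverage is counted in lines of code, which is only one of several possible units.

```python
def percentage(part, whole):
    """Return part/whole as a percentage, or 0.0 when whole is zero."""
    return 100.0 * part / whole if whole else 0.0


# Hypothetical figures for one release cycle.
autonomous_covered_lines = 6_000
total_covered_lines = 8_000
useful_results = 45
irrelevant_results = 15
broken_builds_caught = 18
broken_builds_total = 20

# Share of total coverage that was achieved autonomously.
autonomous_coverage_pct = percentage(autonomous_covered_lines, total_covered_lines)

# Useful results per irrelevant result (infinite if nothing was irrelevant).
useful_ratio = (
    useful_results / irrelevant_results if irrelevant_results else float("inf")
)

# Share of broken builds that autonomous testing flagged correctly.
broken_build_detection_pct = percentage(broken_builds_caught, broken_builds_total)

print(f"Autonomous coverage: {autonomous_coverage_pct:.1f}%")
print(f"Useful-to-irrelevant ratio: {useful_ratio:.1f}")
print(f"Broken builds detected: {broken_build_detection_pct:.1f}%")
```

Tracking these values across releases, rather than reading any single number in isolation, gives a clearer picture of whether autonomous testing is improving over time.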

Through the analysis of these metrics, organizations can ascertain the efficacy of autonomous testing systems in accelerating releases, elevating product quality, and streamlining software development workflows.

Redefining Testing: AI's Autonomous Evolution

In conclusion, autonomous testing represents a transformative approach that harnesses the power of AI and ML to significantly streamline the software testing process.

By understanding its levels of autonomy, challenges, and benefits, as well as its key differentiators from traditional test automation, organizations can capitalize on the immense potential of this technology. It serves to enhance testing efficiency, accelerate development cycles, and ultimately, to deliver superior software products to the market.

As the domain of software development continues its rapid evolution, the adoption of autonomous testing emerges as a pivotal step in the pursuit of excellence in quality assurance.